By an industry veteran who’s seen the promise and pitfalls of enterprise graph analytics firsthand

Introduction: The Promise and Perils of Enterprise Graph Analytics

Graph analytics has emerged as a revolutionary approach to uncovering complex relationships and patterns in data, especially in domains like supply chain optimization, fraud detection, and network analysis. Yet, despite the hype, the reality of enterprise graph analytics failures and the graph database project failure rate remains sobering. Why do so many graph analytics projects fail? What are the common enterprise graph implementation mistakes that trip up even well-funded initiatives?. importantly, how do solutions like IBM Graph DB stack up in production environments against competitors such as Neo4j or Amazon Neptune?

Drawing on 18 months of production experience with IBM Graph DB, this article shares candid insights into power10 supply chain insights real-world challenges, strategies for petabyte-scale graph data processing, supply chain optimization use cases, and the critical ROI analysis that business leaders can’t afford to overlook.

Enterprise Graph Analytics Implementation Challenges: Lessons from the Trenches

Enterprise graph analytics is not just a technology plug-and-play — it’s a deeply involved journey that demands alignment across technical, architectural, and organizational dimensions. Here are some of the toughest hurdles experienced during IBM Graph DB's implementation:

- Graph Schema Design Mistakes: One of the top causes of enterprise graph implementation mistakes is poorly designed graph schemas. Overly complex or shallow models create bottlenecks in query performance and scalability. It’s crucial to apply graph modeling best practices to balance normalization with efficient traversal paths. Performance Bottlenecks & Slow Graph Database Queries: As datasets grew toward petabyte scale, query latency became a serious issue. Without rigorous graph query performance optimization and graph database query tuning, traversal. join operations slowed down, frustrating users and stakeholders alike. Scaling to Petabyte-Scale Graph Data: Handling petabyte-scale graph datasets introduces not just storage challenges but also complex distributed processing demands. IBM Graph DB required careful engineering to avoid exponential growth in traversal times, demanding custom graph traversal performance optimization. leveraging advanced indexing strategies. Integration with Existing Data Platforms: Enterprises often have sprawling data landscapes. Integrating IBM Graph DB with legacy relational databases, data lakes, and ETL pipelines required additional middleware. orchestration to maintain data consistency and freshness. Skill Gaps & Organizational Alignment: The graph paradigm is still emerging; many teams initially underestimated the learning curve. Without proper training and clear governance, graph analytics project failure rates spike dramatically.

These challenges are not unique to IBM — similar patterns are seen across enterprise graph database benchmarks. in comparisons with Neo4j and Amazon Neptune. However, IBM’s integrated tooling and enterprise-grade support have often mitigated risks better than open-source alternatives.

Supply Chain Optimization with Graph Databases: A Game Changer

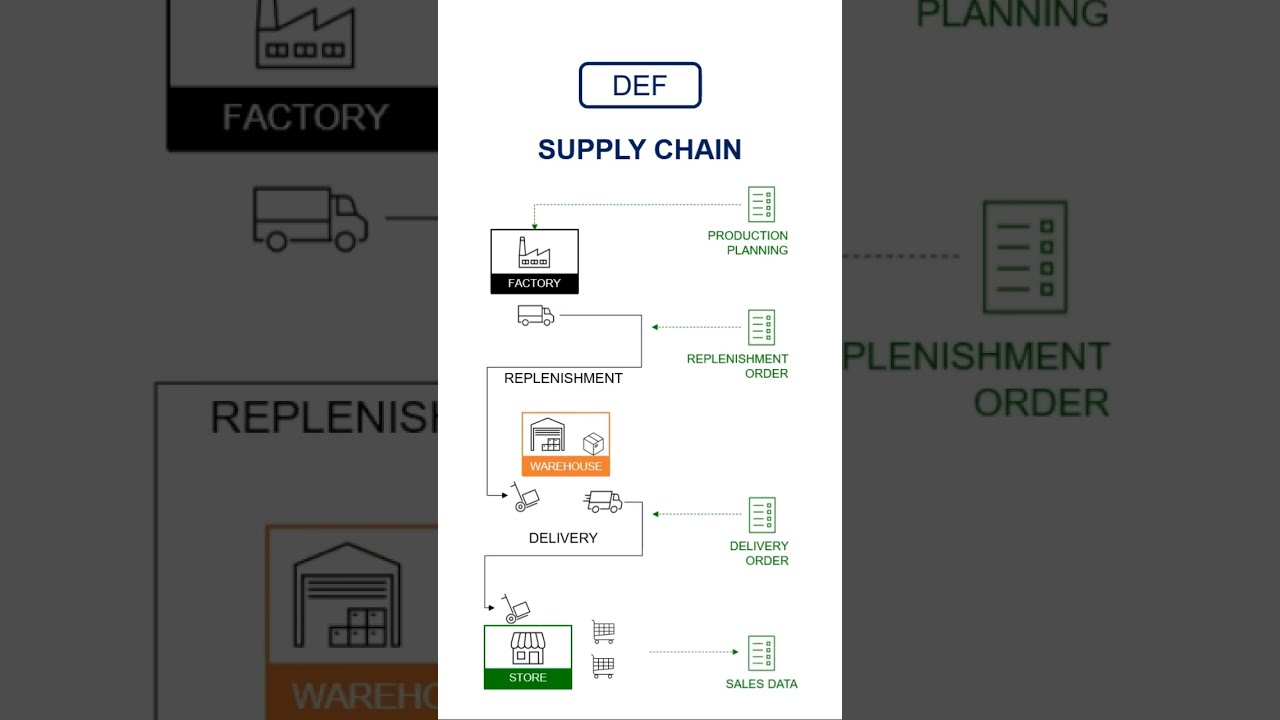

Supply chain networks are inherently graph-shaped: suppliers, manufacturers, warehouses, distributors,. retailers interconnected through complex, dynamic relationships. Applying supply chain analytics with graph databases enables businesses to:

- Identify hidden dependencies. single points of failure Optimize inventory placement and logistics routing Simulate disruption scenarios with real-time graph traversal Analyze supplier risk propagation and cascading impacts

IBM Graph DB’s production experience highlighted that graph database supply chain optimization delivers tangible business value, especially when combined with machine learning for predictive insights. However, success depends heavily on:

- Robust enterprise graph schema design to model multi-tier supplier relationships accurately Efficient supply chain graph query performance to support near real-time decision making Integration with ERP. IoT platforms for continuous data updates

Comparing vendors, IBM stood out for its advanced analytics integration and enterprise-grade security, whereas Neo4j excelled in developer agility and community support. Evaluations like supply chain analytics platform comparison and graph analytics vendor evaluation consistently underline the importance of matching platform capabilities to specific business needs.

Strategies for Petabyte-Scale Data Processing in Graph Analytics

Scaling graph databases to petabyte volumes is no trivial feat. Large-scale graph analytics performance is often constrained by traversal complexity and distributed system overhead. IBM’s production deployment taught us several critical lessons:

Distributed Graph Storage & Sharding: Intelligent partitioning of graph data based on community detection. relationship density helps localize traversals and minimize cross-node communication. Incremental Updates & Graph Streaming: Maintaining freshness without full reloads reduces compute costs and latency. Leveraging event-driven pipelines to update graph overlays is essential. Query Optimization & Caching: Precomputing common traversal paths. applying advanced graph database query tuning reduces repeated expensive operations. Leverage Hardware Acceleration: Utilizing GPUs and in-memory processing where possible accelerates complex traversal and pattern matching at scale. Cloud-Native Architectures: Deploying on elastic cloud platforms enables dynamic resource scaling to meet variable workloads, balancing performance with cost.When comparing IBM vs Neo4j performance or evaluating Amazon Neptune vs IBM Graph, these architectural choices strongly influence results. IBM’s hybrid cloud and container orchestration capabilities often provide superior resilience. throughput at scale, albeit sometimes at a higher petabyte graph database performance cost.

ROI Analysis: Making Sense of Enterprise Graph Analytics Investments

With the complexity and costs involved, stakeholders rightly demand clear visibility into the enterprise graph analytics ROI. Calculating return on investment requires looking beyond initial graph database implementation costs. factoring in:

- Operational Efficiency Gains: Reduced supply chain disruptions, faster fraud detection, and improved customer insights translate into measurable savings. Time to Insight: Faster and more flexible querying enables better, proactive business decisions. Cost of Scale: Understanding petabyte data processing expenses and associated infrastructure costs is vital to avoid budget overruns. Opportunity Cost of Failures: Mitigating risks of enterprise graph analytics failures through proper planning reduces wasted investments.

IBM’s 18 months of production use allowed us to refine an ROI calculation model combining analytics-driven KPIs with cost data. The key takeaways include:

- Initial platform investments tend to be recouped within 18-24 months in supply chain optimization scenarios through efficiency improvements. Continuous operational expenses, especially at petabyte scale, must be carefully managed through query optimization. workload tuning. Comparing enterprise graph analytics pricing across vendors reveals substantial variability; IBM’s pricing reflects its enterprise-grade SLAs but may be higher than open-source alternatives. Successful, profitable graph database projects require close collaboration between data engineers, business analysts, and IT leadership.

Ultimately, a graph analytics implementation case study such as this underscores the business value of mature enterprise graph solutions when deployed with discipline and care.

you know,IBM Graph Analytics vs Neo4j and Amazon Neptune: Performance and Practical Considerations

Comparing IBM Graph DB to Neo4j and Amazon Neptune requires a nuanced look at various factors:

Feature / Metric IBM Graph DB Neo4j Amazon Neptune Enterprise Support & SLAs Robust, IBM-grade support and integration Strong community, commercial support via Aura AWS-managed, tight cloud integration Graph Schema Flexibility Strong schema enforcement & optimization Highly flexible, property graph model Supports RDF & property graph Petabyte-Scale Performance Advanced sharding, optimized traversal, strong scaling Good for medium scale, some sharding limitations Elastic cloud scaling, but query latency varies Query Language Support Gremlin, SPARQL, custom optimizations Cypher, Gremlin (some support) SPARQL, Gremlin Pricing Model Enterprise licensing + usage fees Subscription & cloud service pricing Pay-as-you-go, AWS pricing model Cloud-Native Deployment Hybrid cloud, Kubernetes support Cloud-hosted (Aura), on-premises options Fully managed AWS cloud service Query Performance Optimization Advanced tuning tools, caching layers Query profiling, APOC procedures Basic tuning, limited by cloud constraintsThese differences explain why IBM Graph DB often outperforms Neo4j and Neptune in demanding enterprise environments requiring petabyte-scale graph processing, especially when coupled with stringent SLA and security requirements.

Best Practices for Successful Enterprise Graph Analytics Implementation

From my experience leading IBM Graph DB deployments, here are some proven strategies to avoid common pitfalls and maximize business value:

- Invest in Graph Schema Design Early: Avoid the trap of “just throwing data in” by collaborating closely with domain experts to build a schema that supports efficient traversal and accurate business logic. Implement Continuous Query Performance Monitoring: Regularly identify slow graph database queries and apply targeted graph traversal performance optimization to keep latency low. Adopt Incremental and Stream-Based Graph Updates: Minimize downtime and reduce petabyte scale graph traversal cost by updating only changed portions of the graph. Leverage Cloud and Hybrid Deployments: Use elastic resources to balance cost and performance, especially for peak analytic workloads. Build Cross-Functional Teams: Ensure alignment between data engineers, business analysts, and IT to avoid enterprise graph schema design mismatches and implementation delays. Run Pilot Projects for ROI Validation: Demonstrate measurable graph analytics supply chain ROI or other KPIs before scaling broadly.

Following these guidelines helps transition a graph database project from a risky experiment to a profitable enterprise asset.

Conclusion: IBM Graph DB’s Production Experience – Hard-Won Insights

After 18 months of production use, IBM Graph DB has proven itself as a powerful player for enterprises tackling complex, large-scale graph analytics challenges. The journey was not without stumbles—common enterprise graph analytics failures taught us invaluable lessons around schema design, performance tuning,. operational scaling.

In supply chain optimization, graph analytics unlocked new business value by revealing hidden risks and enabling smarter decisions. At petabyte scale, careful engineering and query optimization were essential to keep performance within acceptable bounds. control costs.

Comparisons with Neo4j and Amazon Neptune underscore that no single platform fits all needs; IBM’s enterprise strengths shine in demanding, security-sensitive, and large-scale contexts, albeit with a premium pricing model.

Ultimately, successful enterprise graph analytics implementation depends on blending technical rigor with clear business alignment, continuous performance tuning, and realistic ROI modeling. For companies ready to invest with eyes wide open, IBM Graph DB offers a mature, battle-tested platform to harness the true power of graph analytics.

Author: A seasoned graph analytics architect with extensive experience in enterprise deployments. performance optimizations.

```